By: Camila Key Saruhashi and Leland Chamlin

Opening

For decades, America’s foundational industries — manufacturing, logistics, energy, and defense — have faced chronic underinvestment. While software, mobile, and cloud computing transformed media, finance, and commerce, the critical infrastructure driving our economy was left behind. As production offshored, domestic capabilities eroded:

- U.S. manufacturing jobs fell by 34% in the 2000s.

- Industrial startups dropped from 12% of new company formations in the 1990s to less than 5% today.

The result is a fragile, outdated system struggling to keep pace with modern economic demands. Recent global shocks — trade wars, geopolitical instability, labor shortages, and climate disruptions — have further exposed these vulnerabilities. The urgency to modernize is clear:

- 86% of manufacturers are restructuring supply chains to nearshore or onshore production.

- Trillions of dollars in industrial spend are now in play, creating an unprecedented opportunity for transformation.

At Construct, we see a rare, once-in-a-generation opportunity born from these challenges. While the need spans all foundational sectors, this piece focuses specifically on manufacturing — where the urgency is great and the potential for AI-driven transformation is clear. We believe AI will enable us to leapfrog decades of stagnation and offshoring, and catalyze a new era of productivity and efficiency — one where foundational industries once again form the bedrock of growth, prosperity, and innovation.

Sense-Think-Act Framework

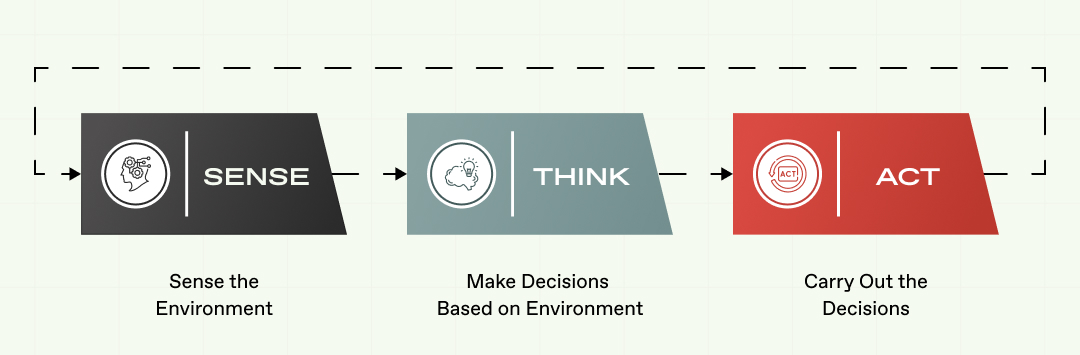

Intelligent systems, like humans and animals, operate in three stages: Sense, Think, Act. This robotics framework helps us map how AI is transforming industrial autonomy.

- Sense – Collect and process real-time data.

- Think – Analyze and interpret data for decision-making.

- Act – Execute decisions autonomously.

Historically, automation has been fragmented, with gaps in data integration, decision-making, and execution. AI is now closing these gaps — paving the way for greater autonomy and efficiency in industrial systems.
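To make the three stages concrete, here is a minimal sketch of one Sense-Think-Act cycle. The `Reading` fields, the 80 °C limit, and the `slow_down` / `continue` decisions are illustrative assumptions, not taken from any real control system.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Reading:
    """One sensed value; the fields are illustrative assumptions."""
    asset: str
    temperature_c: float


def sense(raw_samples: List[float], asset: str) -> Reading:
    # Sense: collect raw samples and reduce them to a usable reading.
    return Reading(asset=asset, temperature_c=sum(raw_samples) / len(raw_samples))


def think(reading: Reading, limit_c: float = 80.0) -> str:
    # Think: interpret the reading and choose a decision (assumed threshold rule).
    return "slow_down" if reading.temperature_c > limit_c else "continue"


def act(decision: str, actuator: Callable[[str], None]) -> None:
    # Act: execute the decision through whatever actuator is supplied.
    actuator(decision)


def run_cycle(raw_samples: List[float], asset: str,
              actuator: Callable[[str], None]) -> str:
    """Run one full Sense -> Think -> Act pass and return the decision taken."""
    reading = sense(raw_samples, asset)
    decision = think(reading)
    act(decision, actuator)
    return decision


commands: List[str] = []  # stands in for a real actuator interface
decision = run_cycle([79.0, 84.0, 86.0], "press-01", commands.append)
print(decision)
```

The gaps described above correspond to the seams between these three functions: in a fragmented plant, each one lives in a different system, and a person often carries the result from one to the next.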

Sense

Definition: The "Sense" stage is the first phase in the data lifecycle, where information is collected, processed, and prepared for decision-making in the "Think" phase.

Challenges: Over the past two decades, industrial IoT (IIoT) sensors and computer vision systems have revolutionized data acquisition. Key developments include:

- Hardware Cost Reduction: The average cost of an IoT sensor has dropped by roughly 70%, from $1.30 in 2004 to $0.38 in 2020. Similarly, camera modules that cost over $1,000 in 1995 are now produced for as little as $10.

- Cloud Adoption: 64% of industrial firms now operate in the cloud, up from just 13% a decade ago.

- Connectivity Improvements: Faster and more reliable networks — 5G, LPWANs, and satellite connections — have enabled real-time data flow at scale.

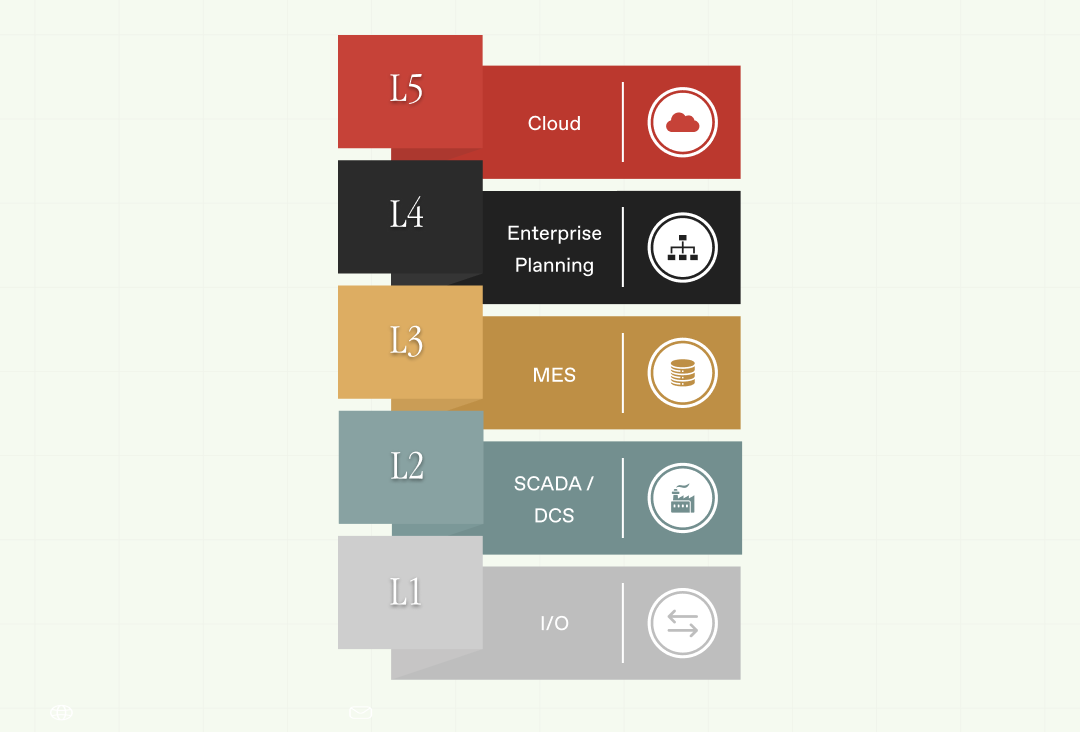

Despite these advancements, data in industrial environments remains highly fragmented, limiting its ability to power AI-driven automation. Traditional manufacturing data architecture follows a hierarchical model, where information moves through multiple layers before becoming actionable:

- Level 1: Sensors and Programmable Logic Controllers (PLCs) collect raw machine data.

- Level 2-3: Systems like SCADA and Manufacturing Execution Systems (MES) process and refine data for real-time control.

- Level 4-5: Enterprise Resource Planning (ERP) platforms aggregate and interpret data for business decision-making and cloud integration.

While this structure helps organize data flow, it introduces critical bottlenecks:

- Siloed and Inaccessible Data: IIoT devices generate massive amounts of machine data, but much of it remains trapped within legacy systems, spreadsheets, or proprietary software, making it difficult to access in real time.

- Loss of Granularity: As data moves up the stack, resolution and fidelity decrease, reducing its usefulness for AI-driven decision-making and predictive analytics.

- Limited Interoperability: Rigid data architectures struggle to integrate with new AI and automation technologies, slowing down digital transformation efforts.
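The loss of granularity is easy to see with a toy example. The sketch below assumes hypothetical one-per-second vibration readings and a higher layer that stores only per-minute averages. Both the numbers and the 10 mm/s alert threshold are made up for illustration.

```python
from statistics import mean
from typing import List


def downsample(samples: List[float], window: int) -> List[float]:
    """Average consecutive windows, as a higher layer might before storing data."""
    return [mean(samples[i:i + window]) for i in range(0, len(samples), window)]


# Hypothetical vibration readings (mm/s): 59 normal seconds, then a brief spike.
raw = [2.0] * 59 + [20.0]
per_minute = downsample(raw, 60)

THRESHOLD = 10.0  # assumed alert level
spike_visible_raw = any(v > THRESHOLD for v in raw)
spike_visible_aggregated = any(v > THRESHOLD for v in per_minute)

print(per_minute, spike_visible_raw, spike_visible_aggregated)
```

The spike that is obvious in the raw signal is averaged away before it reaches the layer where decisions are made, which is exactly the kind of detail a predictive model would need.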

The Opportunity: Unified Namespace (UNS)

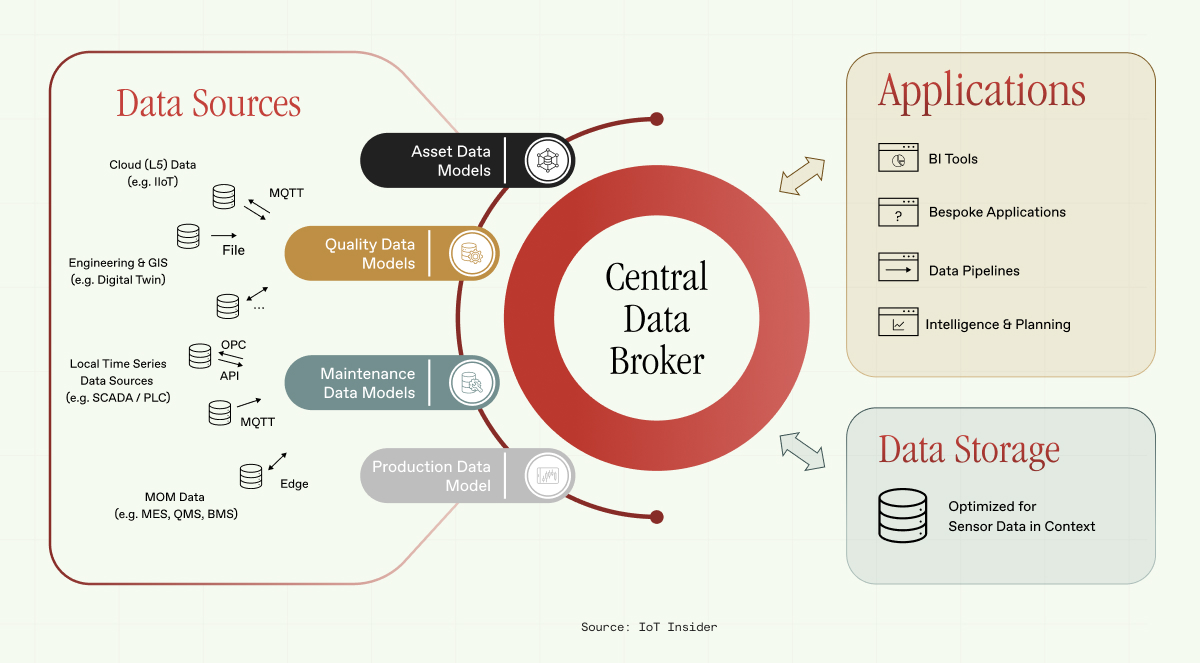

To break these silos, AI is enabling seamless data interoperability through advanced translation layers and standardized APIs. These AI-powered layers act as intelligent intermediaries, ensuring that diverse data formats and communication protocols — OPC UA, MQTT, RESTful APIs — are normalized and structured for immediate use.
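As a rough sketch of what "normalized and structured" can mean in practice, the example below maps three differently shaped readings onto one common record. The input shapes are hypothetical and only loosely modeled on OPC UA, MQTT, and REST payloads. The `Measurement` schema and all function names are our own assumptions, and the mapping here is plain hand-written rules rather than AI.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Measurement:
    """Common record every source is mapped onto (an assumed schema)."""
    asset: str
    metric: str
    value: float
    unit: str
    timestamp: datetime


def from_opcua_like(node: dict) -> Measurement:
    # Hypothetical dict loosely modeled on an OPC UA node read.
    asset, metric = node["browse_path"].split("/")[-2:]
    return Measurement(asset, metric.lower(), float(node["value"]), node["unit"],
                       datetime.fromisoformat(node["source_timestamp"]))


def from_mqtt_like(topic: str, payload: dict) -> Measurement:
    # Hypothetical topic layout: site/line/asset/metric, epoch-millisecond timestamp.
    _, _, asset, metric = topic.split("/")
    ts = datetime.fromtimestamp(payload["ts_ms"] / 1000, tz=timezone.utc)
    return Measurement(asset, metric.lower(), float(payload["v"]), payload["u"], ts)


def from_rest_like(body: dict) -> Measurement:
    # Hypothetical REST body that reports temperature in Fahrenheit.
    celsius = (body["temp_f"] - 32.0) * 5.0 / 9.0
    return Measurement(body["machine"], "temperature", round(celsius, 2), "degC",
                       datetime.fromisoformat(body["time"]))


records = [
    from_opcua_like({"browse_path": "Plant/Line1/press-01/Temperature",
                     "value": 71.5, "unit": "degC",
                     "source_timestamp": "2025-01-01T00:00:00+00:00"}),
    from_mqtt_like("plant/line1/press-02/temperature",
                   {"v": 69, "u": "degC", "ts_ms": 1735689600000}),
    from_rest_like({"machine": "press-03", "temp_f": 158.0,
                    "time": "2025-01-01T00:00:00+00:00"}),
]
for record in records:
    print(record)
```

Once every source lands in the same shape, with the same units and timezone-aware timestamps, downstream applications no longer need to know which protocol a reading came from.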

At the core of this transformation is the Unified Namespace (UNS) — a modern data architecture that replaces traditional hierarchical structures with a real-time, centralized data broker. Operating on a publish-subscribe model, UNS allows:

- Industrial assets (sensors, machines, and IIoT devices) to publish real-time data.

- Applications and AI agents to subscribe to relevant data streams, ensuring continuous, synchronized access across all systems.

By collapsing the traditional manufacturing stack, UNS integrates raw, cleaned, and analysis-ready data into a single, unified system. AI enhances this ecosystem by structuring and synchronizing data across every level of the manufacturing process, improving accuracy, scalability, and automation-readiness.
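The publish-subscribe pattern behind a UNS can be sketched in a few lines. This is a toy in-memory broker, not a real MQTT client. The topic hierarchy (`acme/plant1/<asset>/<metric>`) and the class and function names are assumptions chosen for illustration, while the `+` and `#` wildcards follow MQTT's topic-filter conventions.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Handler = Callable[[str, Any], None]


def topic_matches(pattern: str, topic: str) -> bool:
    """MQTT-style matching: '+' matches one level, '#' matches the remainder."""
    p_parts, t_parts = pattern.split("/"), topic.split("/")
    for i, p in enumerate(p_parts):
        if p == "#":
            return True
        if i >= len(t_parts) or (p != "+" and p != t_parts[i]):
            return False
    return len(p_parts) == len(t_parts)


class Broker:
    """Toy in-memory stand-in for a UNS broker."""

    def __init__(self) -> None:
        self._subs: Dict[str, List[Handler]] = defaultdict(list)
        self.latest: Dict[str, Any] = {}  # last value per topic (current state)

    def subscribe(self, pattern: str, handler: Handler) -> None:
        self._subs[pattern].append(handler)

    def publish(self, topic: str, value: Any) -> None:
        # Record the current state, then fan out to every matching subscriber.
        self.latest[topic] = value
        for pattern, handlers in self._subs.items():
            if topic_matches(pattern, topic):
                for handler in handlers:
                    handler(topic, value)


broker = Broker()
seen: List[tuple] = []
# An application that cares only about temperature, on any asset in plant1.
broker.subscribe("acme/plant1/+/temperature", lambda t, v: seen.append((t, v)))
broker.publish("acme/plant1/press-01/temperature", 71.5)
broker.publish("acme/plant1/press-01/vibration", 2.3)
print(seen)
```

The publisher never needs to know who is listening, and a new application or agent can be added by subscribing to a topic pattern rather than by building another point-to-point integration.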

Within the “Sense” stage, we are particularly excited about companies building new platforms for data integration and composability, unifying IT, OT, and engineering data across all layers of the stack. One example is our portfolio company Copia, which is building Git-based version control and collaboration for industrial DevOps.

Think

Definition: The "Think" stage is where raw data — collected and processed in the "Sense" stage — is transformed into actionable insights.

Challenges: Historically, decision-making in foundational industries has relied on two primary methods:

- Experience & Intuition: Skilled machinists and operators made decisions based on years of expertise, but with growing labor shortages and an aging workforce, this approach is becoming unsustainable.

- Software-Based Insights: Software adoption has been slow in industrial settings, often limited to backward-looking systems of record that require manual interpretation. These systems provide historical insights but lack real-time decision-making.

The Opportunity: AI is poised to bridge the gap between “Think” and “Act” by enabling real-time, autonomous decision-making. This shift is so transformative that "Think" and "Act" are beginning to merge.

For example, we are already seeing Agentic AI transform the factory from a system of intelligence to a system of action.

Unlike traditional industrial software (e.g., CMMS, MES) that assists human decision-making, AI-first systems autonomously reason, decide, and act, eliminating human delays in execution.

Key Enablers of Agentic AI:

- Autonomous Decision-Making & Execution – Advanced models like OpenAI’s o1 leverage real-time reasoning and function calling, allowing AI to interact with external tools, databases, and APIs without human oversight.

- Workflow Orchestration – Frameworks like LangChain connect AI with APIs, databases, and memory modules, enabling it to go beyond static analysis and take real-world actions.

- Structured Reasoning – Chain-of-Thought (CoT) reasoning enhances AI’s ability to break down complex problems, ensuring transparent, reliable, and scalable decision-making.
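Function calling, the first enabler above, comes down to a model emitting a structured request that application code then executes. The sketch below shows only that dispatch step. The JSON shape (`name` plus `arguments`), the `tool` decorator, and the stock lookup are our own assumptions and do not reproduce any vendor's actual wire format.

```python
import json
from typing import Any, Callable, Dict

TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so a model-issued call can reach it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_spare_part_stock(part: str) -> int:
    # Hypothetical lookup; a real system would query the ERP.
    return {"bearing-6204": 0, "belt-a42": 12}.get(part, 0)


def dispatch(tool_call_json: str) -> str:
    """Execute one tool call and return a JSON result to hand back to the model."""
    call = json.loads(tool_call_json)
    fn = TOOLS.get(call["name"])
    if fn is None:
        return json.dumps({"error": f"unknown tool {call['name']}"})
    return json.dumps({"result": fn(**call["arguments"])})


# Pretend the model produced this request after reasoning about a maintenance task.
reply = dispatch('{"name": "get_spare_part_stock", "arguments": {"part": "belt-a42"}}')
print(reply)
```

Only registered functions can be reached, so the registry doubles as the list of actions the agent is allowed to take.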

By eliminating execution bottlenecks, AI is redefining industrial operations, enabling autonomous, self-optimizing systems that drive unprecedented speed, efficiency, and resilience. This transformation goes far beyond predictive analytics — it enables real-time, AI-driven action.

Imagine a factory where machines don’t just predict failures — they autonomously respond. AI detects a deteriorating motor, cross-checks spare part availability in the ERP, generates a purchase order, and schedules a technician — all before a breakdown occurs. This shift from reactive to proactive, AI-driven automation is the foundation of the Factory of the Future — where industrial systems operate with minimal human intervention and maximum efficiency. We’re investing in the founders and companies turning this vision into reality.
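The motor scenario above can be sketched as a short workflow. Everything here is a stand-in: `FakeERP` and its methods are hypothetical rather than a real ERP or CMMS API, and the deterioration check is a simple placeholder rule where a production system would use a learned model.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class FakeERP:
    """Hypothetical stand-in for an ERP/CMMS API; every method is an assumption."""
    stock: Dict[str, int]
    purchase_orders: List[str] = field(default_factory=list)
    work_orders: List[str] = field(default_factory=list)

    def in_stock(self, part: str) -> bool:
        return self.stock.get(part, 0) > 0

    def create_purchase_order(self, part: str) -> None:
        self.purchase_orders.append(part)

    def schedule_technician(self, asset: str) -> None:
        self.work_orders.append(asset)


def is_deteriorating(vibration_mm_s: List[float], limit: float = 4.0) -> bool:
    # Placeholder rule: the last three readings rise and end above an assumed limit.
    a, b, c = vibration_mm_s[-3:]
    return a < b < c and c > limit


def handle_motor(asset: str, part: str, vibration_mm_s: List[float],
                 erp: FakeERP) -> List[str]:
    """Run the detect -> check stock -> order -> schedule sequence."""
    actions: List[str] = []
    if not is_deteriorating(vibration_mm_s):
        return actions
    actions.append("flag_deterioration")
    if not erp.in_stock(part):
        erp.create_purchase_order(part)
        actions.append("create_purchase_order")
    erp.schedule_technician(asset)
    actions.append("schedule_technician")
    return actions


erp = FakeERP(stock={"bearing-6204": 0})
actions = handle_motor("motor-7", "bearing-6204", [2.1, 3.4, 4.8], erp)
print(actions)
```

Returning the list of actions taken gives operators an audit trail of what the system did and in what order.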

We have a long track record of investing in and supporting early-stage companies in the 'Think' phase. Today, we are excited about businesses employing agents to unify a long tail of factory-level data and solve a variety of manufacturing challenges, from quality control and defect monitoring to workplace safety monitoring, labor orchestration, resource allocation, and end-to-end scenario analysis.

Act

Definition: The "Act" stage is where insights are translated into tangible actions.

The Opportunity: Physical AI, or Acting in the Physical World

While Agentic AI is transforming digital execution, Physical AI — powered by World Foundation Models (WFMs) — is revolutionizing robotics and real-world automation.

WFMs represent a new class of foundation models, distinct from Large Language Models (LLMs) like OpenAI’s GPT. While LLMs process and generate language, WFMs integrate multimodal data — including video, sensor inputs, and spatial information — to create high-fidelity, physics-based simulations. These simulations allow AI to predict real-world outcomes, optimize robotic behaviors, and refine control systems before deployment, ensuring machines operate safely and efficiently in complex environments.

A key breakthrough enabling these simulations is Physics-Informed Neural Networks (PINNs). Unlike traditional neural networks that rely solely on data, PINNs embed fundamental physical laws, such as the equations governing fluid dynamics and structural mechanics, directly into the model, typically as terms in its training loss. This ensures that AI-driven models don’t just learn from historical data but also adhere to real-world physics, making them:

- Precise – Delivering more accurate predictions, even with limited real-world data.

- Interpretable – Producing AI decisions that align with real-world physics.

- Reliable – Maintaining robustness even in noisy or unpredictable environments.
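One common way to write this down, shown here as a generic sketch rather than the formulation of any particular system, is a loss with two terms. Assume the governing physics is expressed as a differential equation $\mathcal{N}[u](x) = 0$, the network $u_\theta$ approximates the solution, $(x_i, u_i)$ are $N_d$ measured data points, $x_j$ are $N_r$ sample points where the physics is enforced, and $\lambda$ is a weighting we choose:

$$
\mathcal{L}(\theta) \;=\; \underbrace{\frac{1}{N_d}\sum_{i=1}^{N_d}\bigl\|u_\theta(x_i) - u_i\bigr\|^2}_{\text{fit the data}} \;+\; \lambda\,\underbrace{\frac{1}{N_r}\sum_{j=1}^{N_r}\bigl\|\mathcal{N}[u_\theta](x_j)\bigr\|^2}_{\text{obey the physics}}
$$

The second term needs no labeled measurements, only points at which to evaluate the equation, which is why such a model can stay accurate with limited real-world data: the physics term constrains the solution wherever data is missing.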

What This Means for Robotics

WFMs, powered by physics-aware AI, are transforming robotics, from autonomous vehicles and industrial automation to robotic surgery. By creating high-fidelity, physics-driven simulations, WFMs enhance robot training, automation, and decision-making, bridging the Sim2Real (Simulation to Reality) gap so AI systems can operate reliably in real-world environments.

A key challenge in robotics is learning efficient, safe, and adaptable behaviors without relying on costly, slow, and unsafe real-world trial-and-error. WFMs solve this by accelerating AI learning techniques:

- Reinforcement Learning (RL): Embeds physics-aware reward functions, enabling robots to develop adaptive behaviors faster and with greater accuracy.

- Imitation Learning (IL): Allows robots to learn from human demonstrations while respecting real-world constraints for safer, more natural interactions.

- Self-Supervised & Model-Based Learning: Trains robots in physics-informed simulations, reducing reliance on extensive real-world datasets.

- Multi-Agent Collaboration: Enhances coordination between robots, humans, and autonomous systems in dynamic industrial environments.
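As one simple reading of a "physics-aware reward function," the sketch below rewards a simulated arm for approaching its target and penalizes it for exceeding actuator limits. The `ArmState` fields, the torque and velocity limits, and the penalty weight are all illustrative assumptions, and real systems would derive such terms from the simulator and hardware specifications.

```python
from dataclasses import dataclass


@dataclass
class ArmState:
    """Hypothetical simulated state for a single-joint arm."""
    distance_to_target_m: float
    joint_torque_nm: float
    joint_velocity_rad_s: float


def physics_aware_reward(state: ArmState,
                         max_torque_nm: float = 50.0,
                         max_velocity_rad_s: float = 2.0,
                         penalty_weight: float = 10.0) -> float:
    """Task reward minus penalties for exceeding assumed actuator limits."""
    task_reward = -state.distance_to_target_m  # closer to the target is better
    torque_excess = max(0.0, abs(state.joint_torque_nm) - max_torque_nm)
    velocity_excess = max(0.0, abs(state.joint_velocity_rad_s) - max_velocity_rad_s)
    # Normalize each violation by its limit so the two penalties are comparable.
    penalty = torque_excess / max_torque_nm + velocity_excess / max_velocity_rad_s
    return task_reward - penalty_weight * penalty


within_limits = ArmState(distance_to_target_m=0.1, joint_torque_nm=30.0,
                         joint_velocity_rad_s=1.0)
over_torque = ArmState(distance_to_target_m=0.1, joint_torque_nm=75.0,
                       joint_velocity_rad_s=1.0)
print(physics_aware_reward(within_limits), physics_aware_reward(over_torque))
```

Both states are equally close to the target, but the one that demands more torque than the motor can deliver scores far worse, so a policy trained in simulation is steered away from behaviors the real hardware could not execute.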

By embedding physics-based intelligence into AI, WFMs eliminate many of the bottlenecks in robotic learning, enabling smarter, safer, and more adaptable automation at scale.

We are particularly excited about companies that are using AI to bridge the physical and digital divide in a manufacturing setting. On the software side, this may range from efforts to automate the design and modeling of complex industrial systems like aerospace, energy generation, and automotive, to efforts to more seamlessly optimize engineering workflows for Design for Manufacturability (DFM). We have a long history of expertise in this area, with pioneers like Onshape (acquired by PTC).

On the hardware side, we are increasingly excited by self-learning robotic systems with a technological advantage across several key vectors: systems integration, material handling, and task management. We have seen momentum across a number of key verticals, such as food production with our portfolio company Chef, as well as areas like warehouse automation, construction, and subtractive manufacturing.

Closing

We've explored how AI is transforming Sense, Think, and Act to drive autonomy in foundational industries like manufacturing. In Part 2, we’ll dive into the Factory of the Future — where AI redefines the roles of humans and machines, creating a fully integrated, autonomous manufacturing ecosystem.